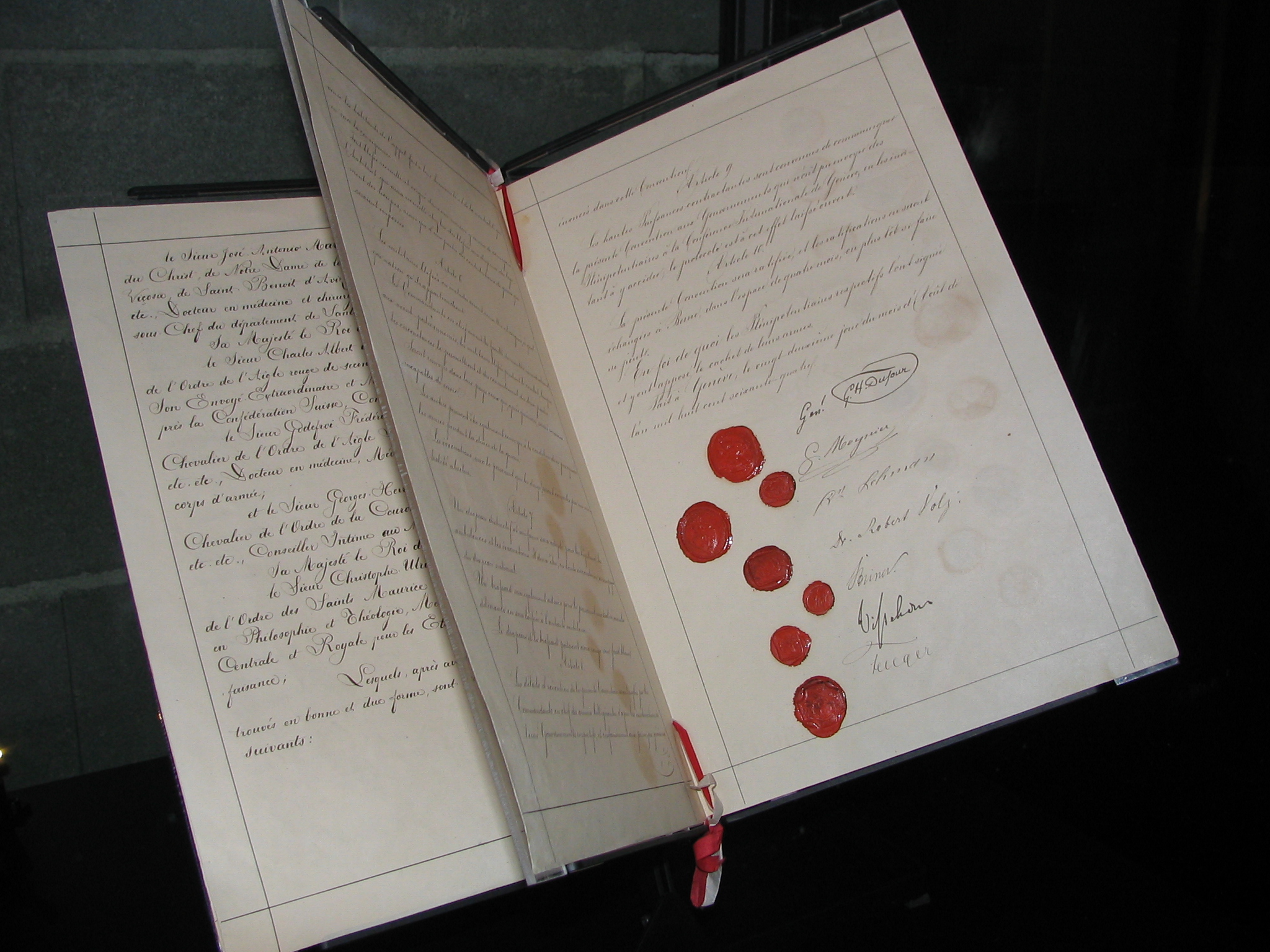

Groups and individuals concerned with international law and with accountability for mistakes and war crimes fall on both sides of the debate over deploying autonomous weapons technology. The ability to discriminate combatants from non-combatants and civilian objects from military objects, and to use force only against military objects, is necessary to fulfill the discrimination requirements of the Geneva Conventions (the original conventions are pictured above), as well as to satisfy the principle of discrimination in Just War Doctrine. The Pentagon claims that the use of unmanned and semi-autonomous vehicles has already been shown to reduce military and civilian casualties. Advocates of unmanned systems argue that autonomous machines observe international law more reliably than humans because machines do not have a choice, and because they are not subject to distortions in decision making caused by emotion or the 'fog of war'. In addition, the laser-guided precision of autonomous weapons is said to be 'unparalleled' compared to contemporary weapons such as crude cruise missiles. According to an American source, US airstrikes in Pakistan using Predator aircraft through September 2009 resulted in 979 total casualties, 9.6% of which were identified as civilians. According to a Pakistani source, of the 60 cross-border drone strikes carried out between January 2006 and April 2009, only 10 hit their targets. Those 10 strikes killed 14 terrorists and 687 civilians. The other 50 strikes missed their mark because of reported intelligence failures and resulted in the loss of 537 civilian lives. These figures paint very different pictures, yet both concern non-autonomous drone strikes, with humans in the loop at all times. The wide divergence between the reports gives reason to pause and consider the question of accountability.

Regardless of which report of civilian casualties is correct, and regardless of how ‘civilian’ was defined, it is unclear which of the five or more personnel operating a drone is responsible for mistakes that result in undue death. In war, collateral damage is inevitable, but there is an ethical and legal obligation to minimize the loss of non-combatant life. Similarly, if, or when, the step is taken to employ fully autonomous weapons, the axis of accountability ought to be clear.

In his software architecture for autonomous systems, Arkin addresses the accountability issue through the design and integration of 'ethical governor' and 'responsibility adviser' modules. The former constrains the functioning of the system by encoding the Laws of War and Rules of Engagement as rule sets that must be satisfied by the robot before it uses force. The latter establishes a formal locus of responsibility for the use of any lethal force by an autonomous robot. According to Arkin, "this involves multiple aspects of assignment: from responsibility for the design and implementation of the system, to the authoring of the LOW and ROE constraints in both traditional and machine-readable formats, to the tasking of the robot by an operator for a lethal mission, and for the possible use of operator overrides" (Arkin, 2009). In this case, accountability is clear and human operators remain ‘in the loop’ as supervisors who serve in a fail-safe capacity in the event of system malfunctions.
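To make the idea of rule sets that must all be satisfied a little more concrete, here is a minimal toy sketch in Python. It is not Arkin's implementation: every name in it (`ProposedAction`, `CONSTRAINTS`, `ResponsibilityRecord`, `governor_permits`) is hypothetical, and the three constraints are simplified stand-ins invented for illustration. It only shows the general shape of the idea: an action is permitted when no encoded constraint is violated, and a record names who is answerable for each stage.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class ProposedAction:
    """Hypothetical, highly simplified description of an action under review."""
    target_is_military: bool
    expected_civilian_harm: int
    authorized_by_operator: bool


# A constraint is a human-readable name paired with a predicate.
Constraint = Tuple[str, Callable[[ProposedAction], bool]]

# Illustrative stand-ins only; real LOW/ROE constraints are far richer.
CONSTRAINTS: List[Constraint] = [
    ("discrimination: target must be a military object",
     lambda a: a.target_is_military),
    ("toy harm threshold: no expected civilian harm",
     lambda a: a.expected_civilian_harm == 0),
    ("tasking: an operator must have authorized the mission",
     lambda a: a.authorized_by_operator),
]


@dataclass
class ResponsibilityRecord:
    """Names the parties answerable at each stage (assumed roles)."""
    designer: str
    constraint_author: str
    operator: str
    overrides: List[str] = field(default_factory=list)


def governor_permits(
    action: ProposedAction,
    constraints: List[Constraint] = CONSTRAINTS,
) -> Tuple[bool, List[str]]:
    """Permit the action only if every constraint holds.

    Returns (permitted, names_of_violated_constraints).
    """
    violated = [name for name, check in constraints if not check(action)]
    return (not violated, violated)


if __name__ == "__main__":
    record = ResponsibilityRecord(
        designer="system design team",
        constraint_author="legal adviser",
        operator="mission operator",
    )
    action = ProposedAction(
        target_is_military=False,
        expected_civilian_harm=2,
        authorized_by_operator=True,
    )
    permitted, violated = governor_permits(action)
    print("permitted:", permitted)
    for name in violated:
        print("violated:", name)
    print("accountable operator:", record.operator)
```

The point of the sketch is the default: the check fails closed, so a single violated constraint blocks the action, and the list of violations together with the responsibility record gives reviewers something to audit afterwards.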

Despite the need to establish a clear axis of accountability, and despite so many military professionals saying that “humans will always be in the loop”, it is ambiguous what constitutes ‘the loop’. Moreover, there is no official commitment preventing autonomous weapons, so until there is, all the ‘in the loop’ talk is hearsay. Indeed, in 2005 the Joint Forces Command drew up a report entitled “Unmanned Effects: Taking the Human Out of the Loop”, and in 2007 the U.S. Army put out a Solicitation for Proposals for a system that could carry out “fully autonomous engagement without human intervention”. Clearly, the military is interested in at least researching autonomous weapons. It would be worthwhile if there were at least equal interest in addressing the legal and accountability concerns.
